Trends in the Web Scraping Industry

Most brands struggle a lot to stay visible on social media. Posts go out regularly. Ads run. Engagement numbers look encouraging on dashboards. Yet, when it comes to real conversations, the inbox often stays silent.

This gap frustrates many marketing teams. Likes rarely turn into questions. Comments may not convert into follow-ups. Manual outreach feels slow, and scaling one-to-one conversations across platforms feels unrealistic.

Direct messages sit right at the centre of this missed opportunity. They are personal by design, but difficult to manage consistently. AI-powered DM marketing helps bridge that gap, making it easier to start meaningful conversations before interest quietly fades away.

Public feeds have become crowded spaces. Sponsored posts compete with friends, creators, and trending content, often within a few seconds of scrolling. Even strong creative people struggle to hold attention for long.

Private messaging works differently. It feels quieter and more intentional. Users are more open to responding when communication is direct and relevant. According to Salesforce’s State of the Connected Customer, customers increasingly expect brands to understand their needs and engage with them in a personalised way.

This shift has changed how marketing performs. Instead of broadcasting messages to everyone, brands are learning to invite dialogue. Direct messages allow that exchange to happen naturally, one conversation at a time, without competing for attention in the public feed.

What makes DMs powerful is not just privacy, but context. Messages arrive in a space users already associate with conversation, not promotion. There is no audience watching, no pressure to react publicly.

This changes how people respond. Questions feel easier to ask. Curiosity shows up more clearly. Users who would never comment on a post may still reply to a message. Over time, these small exchanges build familiarity.

For brands, this means intent becomes visible earlier. Instead of guessing interest from likes or views, teams can learn directly from conversations. That clarity often shortens the path between awareness and action.

Smart DM automation is often misunderstood as mass messaging. In practice, effective systems work in the opposite direction. They focus on relevance, timing, and restraint.

AI analyses publicly available signals across platforms such as Instagram, Reddit, Facebook, X (Twitter), Pinterest, and YouTube. These signals include interaction patterns, content engagement, hashtags, and community participation. From this data, the system identifies users who are more likely to be interested in a specific topic or offering.

Engagement then unfolds gradually. Messages reference relevant interests. Likes and follows happen naturally. Links are shared only when context supports them. Nothing feels rushed.

Relu’s automation approach is built around this principle. Actions are paced, varied, and aligned with platform guidelines. The goal is not volume, but consistency. Not speed, but relevance. When done well, the automation stays in the background while conversations take centre stage.

One common concern around automation is the fear of sounding robotic. That fear is understandable, especially when automation is used without intent. Poorly designed systems often reinforce it.

Thoughtful automation does the opposite. AI handles repetition, but decision-making remains grounded in human logic. Message tone adapts based on platform behaviour and user response. When someone replies, the conversation slows down and becomes more personal.

This balance allows brands to scale outreach without flattening their voice. At the same time, teams regain time. Instead of manually tracking multiple platforms, marketers can focus on refining messaging, responding meaningfully, and learning from conversations as they unfold.

The impact of smart DM automation rarely appears overnight. In many cases, it becomes visible only after brands step back and observe how interactions change.

Replies feel more natural. Conversations last longer. Users ask follow-up questions instead of ignoring messages. Over time, these signals reshape how social channels are used.

Smart DMs allow brands to reach a large number of relevant users without sounding repetitive. Because outreach is interest-driven, messages feel selective rather than broadcasted.

This helps brands stay visible without adding to the noise users already filter out instinctively.

DM engagement grows through small interactions. A reply here. A question there. These moments create familiarity, which often leads to stronger responses later.

Private conversations also give users space to respond honestly, without public pressure or performance.

Direct messages reveal intent more clearly than likes or impressions. Brands begin to see which platforms support deeper engagement and which messages invite dialogue.

These insights come from behaviour, not assumptions. They help teams refine tone, timing, and focus with greater confidence.

Automation reduces repetitive outreach while preserving intent. Teams spend less time sending messages and more time responding thoughtfully.

Over time, this balance improves efficiency and results, without sacrificing authenticity.

The value of AI-driven DM automation becomes clearer when viewed through real-world application. In one such case, an automated DM tool was used to manage outreach across multiple social platforms without increasing manual effort.

Instead of relying on broad messaging, the system focused on identifying relevant user signals and initiating conversations where interest was already present. Engagement unfolded gradually, with messages and interactions paced to match each platform’s natural behaviour.

What stood out was the consistency. Outreach continued steadily across platforms while maintaining a human tone. Conversations felt contextual rather than forced, allowing brands to stay present without overwhelming users or violating platform norms.

This example highlights how AI-driven DM tools can support scalable outreach while keeping interactions relevant and compliant. You can explore the full case study here.

Social media engagement is steadily moving away from public feeds and toward private conversations. Direct messages have become a central space where trust forms and questions are asked.

AI-powered DM automation allows these conversations to scale without losing relevance or tone. When built responsibly, it supports personalisation, efficiency, and genuine engagement.

Tools designed with smart logic, like Relu’s, help brands stay visible while remaining aligned with platform expectations and real user behaviour.

Marketing today isn't just limited to being seen. However, it is more about being invited into a conversation. Brands benefit more if consumers spend more time messaging and reduce interactions with traditional advertisements.

AI powered direct messages enable communication without calling out for attention. Brands can develop trust, learn from their audience, and transform interest into enduring relationships by engaging in thoughtful interactions one at a time.

Conversational social media marketing is the future. AI automation can simplify large-scale conversations and benefit organisations.

Most people don’t struggle with understanding LinkedIn. They struggle with keeping up.

You log in with good intentions. You scroll for a few minutes. You like a post or two. Then work pulls you away. Days pass. Sometimes weeks. When you return, your reach feels lower than before, even though your profile hasn’t changed.

That drop is frustrating, especially when you know visibility comes from regular activity. Liking posts, commenting thoughtfully, staying present. The issue isn’t motivation. It’s time. Few professionals can realistically spend hours each week just staying active on LinkedIn.

This gap between knowing what works and actually doing it is where automation starts to matter. Not to replace effort, but to support it. When used carefully, smart tools help professionals stay consistent without turning LinkedIn into a daily burden.

As LinkedIn grew, so did the pressure to stay active. What once worked with occasional posts and manual comments now demands far more consistency. For many professionals, that expectation quietly became a source of stress rather than opportunity.

Daily likes, regular comments, and ongoing interaction help posts travel further on LinkedIn. Most users understand this. The challenge is sustaining it alongside real work. Engagement often slips between meetings and deadlines.

Over time, this inconsistency shows up as reduced reach and fewer meaningful interactions.

This gap between knowing what works and having the time to do it is what pushed automation into the spotlight. Early tools earned a poor reputation because they focused on volume over relevance. Spammy behaviour shaped how people viewed automation for years.

That perception still exists, but it no longer reflects how modern tools operate.

Today’s engagement tools are designed around context. Instead of pushing activity everywhere, they focus on where interaction actually makes sense. They observe patterns and identify relevant posts. Additionally, they support timely engagement, not forced.

The goal is not to constantly appear active. But it is to stay visible during the appropriate moments.

At its best, automation doesn’t replace authenticity. It protects it. Smart tools free up time for productive responses as they handle repetitive tasks in the background, leading to honest conversations.

Used carefully, automation becomes less about speed and more about consistency without burnout.

It becomes easy to remain active on LinkedIn when engagement is driven by specific intent. This is where trigger-based automation starts to feel useful, instead of overwhelming.

Instead of reacting to whatever appears in the feed, Triggify works around predefined triggers. These triggers can be keywords, specific profiles, or certain types of posts. When relevant activity appears, the system responds automatically.

The result is engagement that feels timely and aligned. You are present where it matters, without constantly monitoring the platform yourself.

Triggers help narrow focus. Rather than spreading attention thin across unrelated content, engagement stays connected to topics and conversations that match your goals. This increases the chance that interactions feel natural, not random.

Over time, this consistency improves visibility. People begin to recognise your presence around specific themes, which strengthens positioning without extra effort.

Triggify’s approach supports steady engagement without demanding daily manual input. By keeping actions human-like and compliant, it helps professionals stay visible while protecting their time and energy.

This approach is not just theoretical. It has already been applied in real working environments where consistency was difficult to maintain manually.

One such example can be seen in how Triggify itself was used to support steady LinkedIn growth through trigger-based engagement. By focusing on relevant keywords, profiles, and post types, the platform was able to stay active without relying on constant manual effort. Engagement remained aligned with intent, and visibility improved gradually over time rather than through sudden spikes.

The full breakdown of this approach is shared in this Triggify LinkedIn growth case study, which explains how automation can support consistency while keeping interactions natural and compliant.

Automation often sounds far more complex than it really is. In practice, most smart engagement tools focus on a few simple actions, repeated carefully over time.

One of the most common tasks automation handles is liking posts based on set conditions. These conditions might relate to keywords, industries, or specific profiles. Instead of manually checking the feed, the tool quietly tracks relevant content and responds when it appears.

This keeps engagement consistent, even when attention shifts elsewhere during the day.

Smart tools also help monitor activity across the platform. They scan posts daily and surface discussions that align with defined interests. This makes it easier to engage while conversations are still active, rather than joining too late.

Timing plays a big role here. Early interaction often feels more natural and visible.

Automation becomes most valuable when engagement needs to happen across many posts. Likes, light interactions, and profile visits can occur without feeling sudden or forced.

Well-designed tools spread actions out over time. This pacing helps activity appear natural and avoids overwhelming other users.

Compliance is built into thoughtful automation. Smart tools respect platform limits and behavioural patterns. They avoid aggressive bursts and follow predictable rhythms, which helps protect accounts from restrictions.

In the end, automation does not replace judgment. It replaces repetition. The human still decides where to focus and when to step in. The tool simply ensures presence doesn’t disappear when attention moves elsewhere.

For many professionals, LinkedIn growth still feels abstract. Post regularly. Engage more. Be visible. The advice is familiar, but the execution rarely fits into a busy schedule.

This is where engagement automation starts to matter beyond convenience. It supports personal branding in a way that doesn’t demand constant attention. Instead of reacting sporadically, professionals can stay present in conversations that align with their interests or industry.

Founders often use this approach to remain visible while focusing on building their business. Marketers rely on it to maintain steady engagement without splitting attention across dozens of profiles. Creators benefit from consistent interaction that reinforces their presence around specific themes.

What makes this shift important is balance. Automation works best when it handles repetition, not relationships. It creates space for human responses, thoughtful comments, and genuine follow-ups. When used with restraint, it supports credibility rather than replacing effort.

LinkedIn favours interaction over mere visibility. Professionals who combine intent with consistency tend to be the winners.

LinkedIn growth today depends less on posting volume. Moreover, it favours steady and relevant engagement. Staying visible no longer means being online all day. Trigger-based automation helps professionals interact where it matters most.

When engagement is guided by intent, it feels more natural and sustainable. Using innovative tools smartly supports consistency while leaving room for genuine interaction.

LinkedIn success does not come from working harder, but from working smarter. Automation, when applied thoughtfully, amplifies effort instead of replacing it. Tools like Triggify show how consistency and relevance can coexist without burnout.

As LinkedIn continues to reward meaningful interaction, professionals who balance smart systems with human judgment will be better positioned to grow, connect, and stay visible over time.

Most brands struggle a lot to stay visible on social media. Posts go out regularly. Ads run. Engagement numbers look encouraging on dashboards. Yet, when it comes to real conversations, the inbox often stays silent.

This gap frustrates many marketing teams. Likes rarely turn into questions. Comments may not convert into follow-ups. Manual outreach feels slow, and scaling one-to-one conversations across platforms feels unrealistic.

Direct messages sit right at the centre of this missed opportunity. They are personal by design, but difficult to manage consistently. AI-powered DM marketing helps bridge that gap, making it easier to start meaningful conversations before interest quietly fades away.

Public feeds have become crowded spaces. Sponsored posts compete with friends, creators, and trending content, often within a few seconds of scrolling. Even strong creative people struggle to hold attention for long.

Private messaging works differently. It feels quieter and more intentional. Users are more open to responding when communication is direct and relevant. According to Salesforce’s State of the Connected Customer, customers increasingly expect brands to understand their needs and engage with them in a personalised way.

This shift has changed how marketing performs. Instead of broadcasting messages to everyone, brands are learning to invite dialogue. Direct messages allow that exchange to happen naturally, one conversation at a time, without competing for attention in the public feed.

What makes DMs powerful is not just privacy, but context. Messages arrive in a space users already associate with conversation, not promotion. There is no audience watching, no pressure to react publicly.

This changes how people respond. Questions feel easier to ask. Curiosity shows up more clearly. Users who would never comment on a post may still reply to a message. Over time, these small exchanges build familiarity.

For brands, this means intent becomes visible earlier. Instead of guessing interest from likes or views, teams can learn directly from conversations. That clarity often shortens the path between awareness and action.

Smart DM automation is often misunderstood as mass messaging. In practice, effective systems work in the opposite direction. They focus on relevance, timing, and restraint.

AI analyses publicly available signals across platforms such as Instagram, Reddit, Facebook, X (Twitter), Pinterest, and YouTube. These signals include interaction patterns, content engagement, hashtags, and community participation. From this data, the system identifies users who are more likely to be interested in a specific topic or offering.

Engagement then unfolds gradually. Messages reference relevant interests. Likes and follows happen naturally. Links are shared only when context supports them. Nothing feels rushed.

Relu’s automation approach is built around this principle. Actions are paced, varied, and aligned with platform guidelines. The goal is not volume, but consistency. Not speed, but relevance. When done well, the automation stays in the background while conversations take centre stage.

One common concern around automation is the fear of sounding robotic. That fear is understandable, especially when automation is used without intent. Poorly designed systems often reinforce it.

Thoughtful automation does the opposite. AI handles repetition, but decision-making remains grounded in human logic. Message tone adapts based on platform behaviour and user response. When someone replies, the conversation slows down and becomes more personal.

This balance allows brands to scale outreach without flattening their voice. At the same time, teams regain time. Instead of manually tracking multiple platforms, marketers can focus on refining messaging, responding meaningfully, and learning from conversations as they unfold.

The impact of smart DM automation rarely appears overnight. In many cases, it becomes visible only after brands step back and observe how interactions change.

Replies feel more natural. Conversations last longer. Users ask follow-up questions instead of ignoring messages. Over time, these signals reshape how social channels are used.

Smart DMs allow brands to reach a large number of relevant users without sounding repetitive. Because outreach is interest-driven, messages feel selective rather than broadcasted.

This helps brands stay visible without adding to the noise users already filter out instinctively.

DM engagement grows through small interactions. A reply here. A question there. These moments create familiarity, which often leads to stronger responses later.

Private conversations also give users space to respond honestly, without public pressure or performance.

Direct messages reveal intent more clearly than likes or impressions. Brands begin to see which platforms support deeper engagement and which messages invite dialogue.

These insights come from behaviour, not assumptions. They help teams refine tone, timing, and focus with greater confidence.

Automation reduces repetitive outreach while preserving intent. Teams spend less time sending messages and more time responding thoughtfully.

Over time, this balance improves efficiency and results, without sacrificing authenticity.

The value of AI-driven DM automation becomes clearer when viewed through real-world application. In one such case, an automated DM tool was used to manage outreach across multiple social platforms without increasing manual effort.

Instead of relying on broad messaging, the system focused on identifying relevant user signals and initiating conversations where interest was already present. Engagement unfolded gradually, with messages and interactions paced to match each platform’s natural behaviour.

What stood out was the consistency. Outreach continued steadily across platforms while maintaining a human tone. Conversations felt contextual rather than forced, allowing brands to stay present without overwhelming users or violating platform norms.

This example highlights how AI-driven DM tools can support scalable outreach while keeping interactions relevant and compliant. You can explore the full case study here.

Social media engagement is steadily moving away from public feeds and toward private conversations. Direct messages have become a central space where trust forms and questions are asked.

AI-powered DM automation allows these conversations to scale without losing relevance or tone. When built responsibly, it supports personalisation, efficiency, and genuine engagement.

Tools designed with smart logic, like Relu’s, help brands stay visible while remaining aligned with platform expectations and real user behaviour.

Marketing today isn't just limited to being seen. However, it is more about being invited into a conversation. Brands benefit more if consumers spend more time messaging and reduce interactions with traditional advertisements.

AI powered direct messages enable communication without calling out for attention. Brands can develop trust, learn from their audience, and transform interest into enduring relationships by engaging in thoughtful interactions one at a time.

Conversational social media marketing is the future. AI automation can simplify large-scale conversations and benefit organisations.

Feeling overwhelmed by guest reviews? Discover the 5 clear signs your Airbnb business needs automation — and how Relu’s smart review management system helps hosts save time, boost ratings, and grow faster.

That’s how burnout begins.

Sarah, who manages four Airbnb properties, knows it well — she missed her daughter’s soccer game because she was replying to a 2-star review.

The emotional toll is heavy: checking your phone at dinner, refreshing the Airbnb app every hour, and losing sleep over one negative comment.

Hosts spend an average of 15–20 hours each week managing reviews manually. As your business grows, those hours multiply — while your free time, focus, and peace of mind vanish.

The truth? You can’t scale your hosting business while staying stuck in manual mode.

Here are five unmistakable signs it’s time to automate your Airbnb review management before it becomes overwhelming. And that’s where Relu comes in, helping hosts like Sarah save time by automating review responses, insights, and guest communication.

Every “quick peek” at your reviews adds up.

Between checking multiple platforms, replying, tracking, and cross-referencing, you lose 2–3 hours daily.

Jake, a six-property host, confessed:

“I check reviews before my morning coffee, during lunch, and before bed. It’s exhausting.”

Once you cross three properties, manual review handling becomes a time trap. Hosts who spend over two hours daily on feedback management hit a ceiling — they simply can’t scale.

Relu’s solution changes that completely.

Its centralized dashboard pulls every review from Airbnb, Booking.com, and Google into one place — so you respond once, not three times.

Hosts using Relu’s solution report saving 12–15 hours a week, scaling to 10+ properties up to 40% faster, and finally focusing on what matters most — their guests and growth.

Reviews aren’t just ratings but they’re business insights.

But when feedback is scattered across platforms, vital details slip through.

Small issues like weak Wi-Fi or leaky taps can quietly drag your ratings down.

Tom learned this the hard way: repeated AC complaints buried in Booking.com reviews dropped his 4.9 rating to 4.6 — costing him bookings and his Superhost badge.

That’s where Relu’s AI-powered sentiment analysis steps in.

It reads between the lines, identifying recurring pain points and opportunities you might miss manually.

Hosts using Relu’ solution recover an average of 0.2–0.3 stars in their ratings within three months — just by acting on insights they couldn’t previously see.

As soon as more than one person starts handling reviews, chaos creeps in.

Duplicate replies. Missed messages. Conflicting tones.

Without a system, teams waste hours each week figuring out who should respond and guests can sense the inconsistency.

Relu solves this with built-in collaboration tools that let you assign, approve, and monitor responses in real time.

Everyone sees what’s been handled, what’s pending, and what needs escalation.

Consistent, timely replies aren’t just efficient but they build trust. Hosts using Relu maintain a uniform brand voice and reduce guest confusion by over 70%.

Without structured analytics, hosts operate in the dark.

Manual tracking captures barely 20–30% of recurring patterns, leaving you guessing why one property outperforms another.

Are guests happier with your coastal apartment than your city loft? Is your cleanliness score dipping every summer? You can’t fix what you can’t measure.

Hosts who track review trends gain pricing power since properties with 4.8+ ratings charge 15–20% higher rates on average.

Relu’s analytics dashboard gives you that missing visibility.

It groups feedback by sentiment, category, and location — showing you exactly what guests love (and what needs fixing).

Hosts who use Relu’s insights often find patterns they never noticed before from seasonal dips to recurring mentions of check-in ease or decor.

Armed with data, they raise satisfaction scores, adjust pricing, and stay ahead of guest expectations.

Late replies, typos, and emotional responses happen, especially when you’re tired.

One poor response can go public instantly.

David, a California host, once replied defensively at 2 AM. His comment went viral and bookings dropped by 30%.

Relu prevents that spiral.

With tone-controlled templates, approval workflows, and scheduled responses, every message stays polished and professional, even under pressure.

Hosts say it best: “Relu keeps my tone consistent and my stress low. I never wake up worried about what I said at midnight.”

Manual review management doesn’t just cost time but also growth.

Quantifiable Losses:

Opportunity Costs:

Relu flips that equation.

With automation, hosts reclaim hours, increase their response rate, and unlock opportunities that were buried under manual work.

Relu’s solution isn’t just another review tool but an intelligent management system designed specifically for Airbnb and short-term rental hosts.

It centralizes every review, tracks sentiment, and highlights what matters most so you can take action before issues affect your reputation.

As one host puts it:

“Within two weeks, I stopped checking my phone obsessively. My rating went from 4.7 to 4.9 and I finally got my weekends back.”

Relu doesn’t just automate reviews — it amplifies your business and gives you your life back.

Within 2–4 weeks, you’ll notice faster responses, higher ratings, and more peace of mind.

Hosting should be fulfilling, not fatiguing.

Reviews are the heartbeat of your business, but managing them shouldn’t drain your time, energy, or joy.

Automation isn’t just a convenience; it’s the bridge between surviving and thriving in the hospitality space.

And Relu provides you that bridge. It is the all-in-one automation partner helping hosts regain control, enhance guest relationships, and scale sustainably.

Because in 2025’s competitive rental landscape, success belongs to the hosts who evolve —

those who choose smart systems over manual stress, and automation over anxiety.

If three or more of these signs sound familiar, it’s time to take the leap.

With Relu, you won’t just manage reviews —

👉 You’ll master growth.

Discover how healthcare organizations can save millions and improve care by adopting centralized data management. Learn from a real EMR migration case that proves how data centralisation enhances security, efficiency, and patient outcomes.

Imagine a hospital where every department operates in isolation — lab results in one system, prescriptions in another, and imaging reports buried in a third.

Now think of the confusion that happens when a patient walks in and the doctor doesn’t have access to their complete history.

That’s not a rare scenario — it’s the everyday reality for many healthcare organizations.

According to McKinsey, 20–25% of the total healthcare spending (nearly $1 trillion), is wasted annually due to inefficiencies, and up to $750 billion could be saved through better data utilization

The culprit? Fragmented patient data is spread across multiple platforms that don’t talk to each other.

The result? Lost time, redundant work, and compromised care.

But there’s good news — centralized data management is turning this story around.

Here are the five hidden costs of fragmented patient data that quietly erode the performance and reliability of healthcare organizations.

When clinicians can’t access a patient’s full record, they often repeat tests that have already been performed. This duplication may seem minor, but across thousands of cases, it becomes a massive hidden expense.

The average duplicate rate for a single hospital is between 5-10%. Healthcare organizations that create just 5 duplicates daily spend $78,000 a year on hidden operational costs at $50 per duplicate pair. Fragmented data doesn’t just waste money — it damages confidence between patients and providers.

Every disconnected system adds an extra step for staff. Healthcare professionals often spend hours reconciling mismatched data, re-entering information, or chasing missing details.

These inefficiencies create a ripple effect — longer patient queues, increased staff frustration, and reduced focus on clinical priorities.

Incomplete or inconsistent data can directly impact patient safety. Without access to accurate medical histories, healthcare providers need to make decisions based on partial information.

When patient data is fragmented, every handoff becomes a risk point — increasing the likelihood of human error and clinical oversight.

Data fragmentation doesn’t just slow operations — it weakens security. Every disconnected database is another door left open for potential breaches.

Beyond financial loss, breaches cause lasting reputational damage and patient distrust, both of which are harder to repair than any balance sheet.

Behind every healthcare system lies a patchwork of outdated technologies.

Managing multiple platforms consumes resources that could otherwise drive innovation.

This hidden cost isn’t visible on a balance sheet — but it silently drains both financial and human capital across the organization.

Fragmented patient data isn’t just an operational nuisance — it’s a barrier to safe, efficient, and intelligent care. Centralized data management solves this by connecting every record, report, and insight into a single, secure environment, transforming scattered information into a continuous, actionable flow.

By linking systems, departments, and locations, hospitals can eliminate inefficiencies, reduce risk, and empower smarter decisions — turning data into a true asset rather than a source of waste. Here is how….

Why it matters: When patient information is scattered across systems, duplication, delays, and errors are inevitable. A unified record connects all data points, giving clinicians a complete, accurate view of each patient.

Impact in Action:

A single source of truth creates a connected foundation, ensuring every interaction is precise and every patient is safer.

Why it matters: Manual data entry and reconciliation break up workflows and consume hours of staff time. Automation links disparate systems, cleaning, validating, and standardizing records so teams spend less time chasing data and more time on care.

Impact in Action:

Automation connects workflows across departments, transforming staff from data wranglers into patient care champions.

Why it matters: Fragmented systems slow collaboration and decision-making. A centralized database links clinicians, departments, and facilities in real time, giving every provider an accurate, current view of patient information.

Impact in Action:

Real-time access ensures data flows continuously, shifting healthcare from reactive to proactive.

Why it matters: Scattered data weakens security and compliance. Centralized cloud storage connects access, monitoring, and encryption, making protection an integrated part of the system.

Impact in Action:

Centralized security links protection and accessibility, turning safety into a built-in strength rather than an afterthought.

Why it matters: Outdated, disconnected systems slow innovation and inflate costs. Centralization links operational processes and analytics, giving hospitals a single platform for insights, predictive care, and digital transformation.

Impact in Action:

That’s the true power of centralized data management: connection in action, transforming fragmented healthcare into a seamless, intelligent, patient-centered system.

What was once a web of disconnected systems became an intelligent, secure ecosystem that put patients at the center of every decision.

In healthcare, information is life. And when that information is fragmented, the consequences ripple through every corner of the system — from clinical safety to financial health.

Data centralisation isn’t merely an upgrade; it’s a transformation.

By uniting data into a centralized database, healthcare organizations can:

Centralized data management transforms scattered systems into a synchronized network of knowledge — one that saves time, money, and ultimately, lives.

In an age where every second counts and every decision matters, data centralisation is not just about managing information — it’s about empowering action.

Because the true measure of a modern healthcare system isn’t how much data it holds, but how seamlessly that data connects people, purpose, and care.

The sports betting industry is evolving at a faster rate. Users’ expectations are constantly growing, warranting the need for technological support. Bettors today expect instant access to live odds and seamless transactions as quickly as possible. The need of the hour is a platform that never misses a pulse.

Robot Automation has proved to be a game-changer in online betting. It is capable of preventing a system glitch, which can result in the loss of valuable opportunities for both the operator and the bettor. Robot Automation confers betting platforms the required speed, accuracy and efficiency.

Robot Automation is the key to staying ahead in the competitive online betting market. Let’s explore how robot automation is transforming online betting and the benefits it offers.

Online sports betting is no longer just about placing bets. It’s a complex, high-stakes operation. Let’s look at the common challenges:

These pressure points make it clear: manual systems are no longer enough. Betting operators need tools that can think, act, and adapt faster than humans.

It’s essential to understand how betting bots operate before implementing robot automation.

Software programs designed to make informed decisions similar to humans are betting bots. They include:

These systems are modular. It means they adapt to different platforms and sports. Additionally, they are designed to be sneaky. They avoid getting detected using proxy rotation and randomised timing.

This automation upgrade helps users to scale their operations and eliminates the burden of manual effort.

Robot automation is changing the game by using software bots that combine real-time data with user-defined logic. They make decisions, take executive actions, and adapt to changing conditions. And all these without any human intervention.

Betting bots work similarly to trading bots in stock markets or crypto.

Here are the key benefits:

Robot automation isn’t just a tool! It’s a strategic advantage!!

Let’s look at a real example. Relu Consultancy tackled a major challenge in betting automation.

The problem: Betting platforms change frequently. UI updates, anti-bot measures, and CAPTCHAs make automation unstable.

Relu’s solution: They built a modular Chrome extension that automated betting across platforms like PS3838 and CoolBet.

Here’s how they did it:

The impact:

Relu’s case proves that robust, scalable automation is possible and already happening.

One of the most exciting aspects of robot automation is its easy accessibility.

Till yesterday, automation tools were under the dominance of developers and tech-savvy users. Today, platforms are designed with simplicity at their core. It has removed the need to write code or understand complex algorithms.

In the past, automation tools were reserved for developers and tech-savvy users. Today, platforms are designed with simplicity in mind. You don’t need to write code or understand complex algorithms.

Modern betting bots offer:

This shift empowers casual bettors, analysts, and small operators. It helps them compete with larger firms. Automation levels the playing field.

It’s not just about replacing manual work, it’s about unlocking new possibilities.

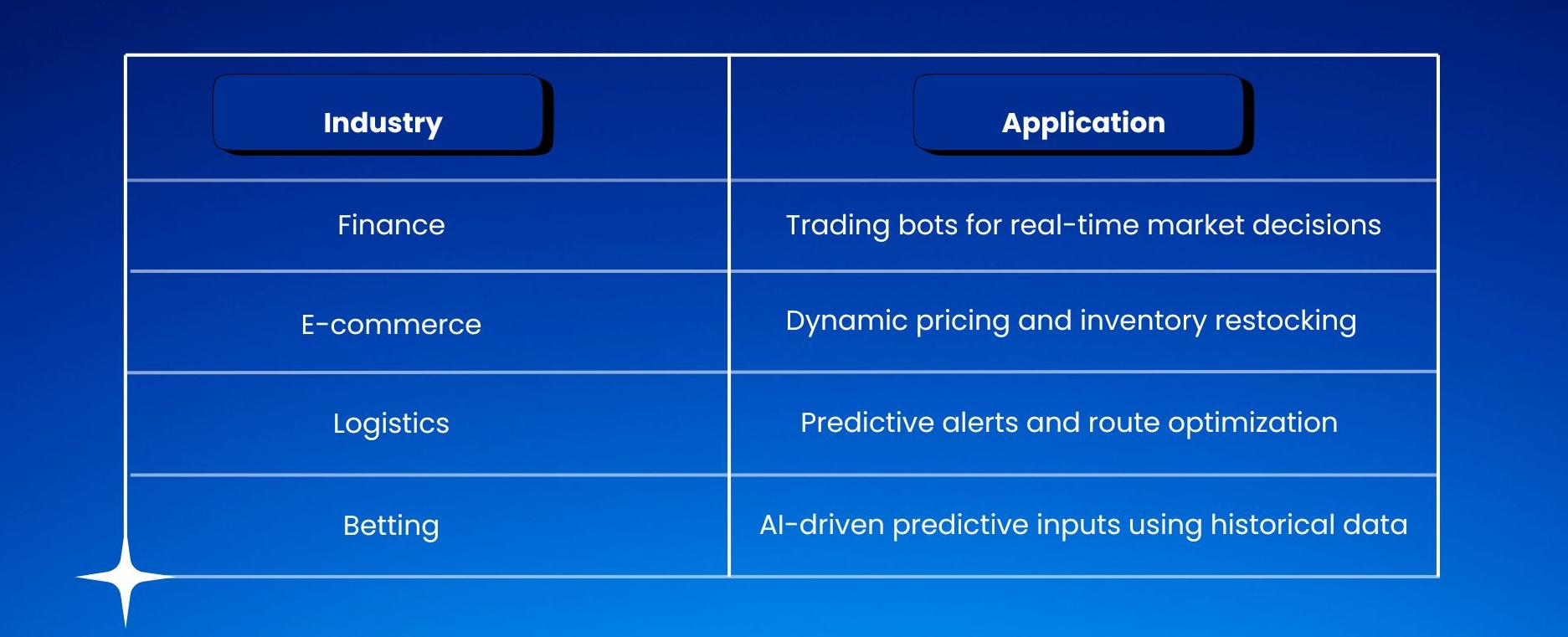

The power of automation goes beyond sports betting. Let’s explore how similar technologies are transforming other industries:

The future looks promising for betting automation. Let’s see how.

Betting bolts will become smarter, faster, and more accessible as technology continues to evolve.

If you wish to go with betting automation, choose a platform that supports bot integration. Define your betting logic clearly. Test your bot in a controlled environment before scaling.

Look for tools that offer:

Partner with experts like Relu Consultancy. They help you build a stable and scalable solution.

Automation hasn't remained only for tech-savvy users. With the right tools, anyone can benefit.

Robot automation in sports betting is no longer imaginative. It’s already happening and reshaping the industry.

Relu’s case study shows that real-world, scalable automation is achievable. Betting platforms adapting to this shift will gain a clear edge.

Here’s the key outcome: Automation isn't an option for industries needing speed and precision to scale revenue. It's mandatory.

If you’re still depending on manual workflows, it’s time to rethink your strategy. The future is automated. Are you ready to join it?

The fast-paced world of e-shopping automation has been the game changer. Businesses use automation to optimize operations from order processing to customer support. Product listing management is one area of automation that significantly impacts online e-commerce business. Automated product listing removes the role of manual data entry and offers error-free, seamless catalog updates.

At Relu Consultancy, we have worked with many e-commerce businesses to streamline their operations with custom automation solutions. Our expertise in automated product listing further supports businesses in terms of time, accuracy, and sales. Let’s explore the benefits of automated product listing and how Relu can help drive this automation smoothly.

Automated product listing is the process of automatically populating and updating the product catalog on an e-commerce platform. This system connects with inventory management, digital marketing tools, and pricing algorithms to ensure everything is accurate

This is done using spreadsheets or CSV files to upload multiple products simultaneously.

It includes integration of an API to fetch and update product details dynamically.

An automated sync process is implemented on platforms like Amazon, eBay, and Shopify.

Advanced automation tool with AI to optimize product descriptions, images, and keywords.

It is an old and evergreen process in which products are added individually after carefully screening and checking the details.

Manual product listing is time-consuming and prone to human errors. Automation support by Relu eliminates this need for repetitive data entry, reducing the risk of mistakes and ensuring that all the product details remain accurate across multiple channels.

Automated product listing ensures stock levels are updated in real-time. This prevents stockouts, overselling, and discrepancies between the e-commerce store and the warehouse. It also leads to better inventory control and customer satisfaction.

Automated systems optimize product descriptions and metadata to improve search engine performance. By using relevant keywords, structured data, and high-quality images, businesses can improve their online visibility and attract more customers.

Expanding to new marketplaces is easier than ever with automation. Businesses can quickly list their products on different platforms and reach a broader audience without requiring extensive manual input.

A well-maintained e-commerce platform has a catalog that offers a seamless experience. Automated listings ensure that product details, pricing, and images remain constant across all platforms. This improves trust and satisfaction among customers.

Product listing automation has been a game-changer for developing online businesses. By implementing automated product listings, enterprises get strong support to optimize their online presence and enhance customer experience. Various industries have benefited significantly from automated e-commerce listings. Below are some of the most beneficial industries that can leverage product listing automation for growth:

The retail sector is one of the biggest beneficiaries of product listing automation. Thousands of products, high customer demand, and stock updates will be handled seamlessly here. Moreover, the inclusion has simplified the inventory management process and improved online store operation.

The fashion industry operates differently and changes quickly with trends. Product listing automation helps fashion brands maintain their strong digital presence without the burden of manual listing management.

The fast-moving consumer food industry deals with a high volume of rapidly selling products. Automated product listing improves the efficiency of the process by managing frequent updates.

Automated product listing is crucial and time-saving for any e-commerce business. It improves efficiency, scalability, and accuracy. With automated e-commerce listing, businesses can effortlessly improve customer experience, inventory management, and expansion into new markets.

With Relu's expertise, specialize your business in custom automation solutions and explore the realm of growth in sales revenue with automated product listing.

Online shoppers today expect more than just a list of products - they prefer an online store that helps them find exactly what they're looking for. This is where dynamic product catalogs come in, transforming how e-commerce businesses connect products with customers.

By combining automation and technology with user-friendly design, these catalogs go beyond basic product displays and offer customers a seamless and engaging experience.

In this article, we'll explore the key features that make up dynamic product catalogs and how they benefit the e-commerce industry.

A product catalog shows everything customers need to know about your items, from descriptions and images to prices and specifications. In the digital world, these catalogs work like virtual storefronts, letting customers browse their entire selection from any device.

Unlike paper catalogs, digital versions offer interactive features that help shoppers find and learn about products more easily, making it simpler for them to decide what to buy.

A dynamic product catalog transforms your product display into an interactive shopping experience. So, when designing a catalog, make sure to include these essential features:

As online shopping continues to grow, dynamic product catalogs have become essential for e-commerce growth. Here's how these powerful tools can help your online store grow:

Today's online shoppers base their buying decisions heavily on product details. A Google Retail Study found that 85% of customers consider product information and images crucial when choosing where to shop. Without quality product information, you risk losing customers to competitors who provide better details.

A dynamic product catalog displays accurate, up-to-date information to create a smoother shopping experience. When products are properly indexed and searchable, customers find what they want faster.

The catalog can display personalised recommendations based on each shopper's behaviour, keeping them engaged and reducing the chances of them leaving your site without making a purchase.

Dynamic product catalogs improve both customer experience and your store's search engine rankings. These catalogs automatically handle product metadata updates - from descriptions and titles to categories.

This means your product information stays consistent across all pages, which search engines reward with better rankings.

Since the catalog optimises product tags and categories with relevant keywords, people searching for your products online can find your store more easily. And because these visitors are actively looking for what you sell, they're more likely to buy when they reach your site.

For instance, we helped Executive Advertising, a leading promotional products company, build a dynamic catalog. Our system ensures their extensive range of product information- from apparel to electronics is shown in a standardised way, making them easily discoverable online.

Dynamic product catalogs make it easier to boost e-commerce sales by showing customers relevant product recommendations at the right time. It uses customer data like browsing history and past purchases to suggest personalised customer recommendations.

When a customer views a product, the catalog automatically displays related items they might want to buy together, like suggesting a phone case when someone looks at smartphones.

For upselling, the catalog can smartly show premium versions of products customers are viewing. For example, if someone is looking at a basic coffee maker, they'll see higher-end models with extra features right next to them.

Dynamic product catalogs serve as a central hub for all your product information, making inventory management simpler and more accurate. When you update product details, stock levels, or pricing in one place, these changes reflect instantly across your entire online store, multiple sales channels, and internal systems.

This centralised approach helps your business in several ways:

Dynamic product catalogs represent a significant shift in e-commerce capabilities. By displaying product information in a more accessible, personalised, and engaging way, they help convert browsing into sales.

These catalogs enhance everything from customer experience and SEO rankings to inventory management and operational efficiency. Most importantly, they adapt to how people actually shop online.

For e-commerce businesses looking to grow, dynamic product catalogs aren't just a tool – they're an engine that drives sustainable growth through better customer experiences and smoother operations.

Partner with Relu Consultancy to get a catalog that optimises your product management and displays better revenue.

Managing your tasks and processes can get overwhelming, but the right automation tools can make things easier. With no code automation platforms, you don’t need to know how to code to create workflows that work for your team. Here are the key features to consider when choosing the best platform for your needs:

A good platform should be simple and easy to navigate. No code tools are designed for people who aren’t tech experts, so look for something with a drag-and-drop builder or a clear setup process.

For example, if you need to create a workflow for approvals or notifications, the platform should make it simple to connect tasks and set things up without frustration. Workflow tools with a straightforward design help you get started quickly and let you focus on your work instead of figuring out the system.

The best workflow automation tools connect with the apps your team already relies on. Whether you use email, spreadsheets, or project management tools, the platform should make it easy to link everything together.

For example, if your team uses Google Workspace or Microsoft 365, the platform should sync seamlessly with Gmail, Google Drive, or Teams. This way, your process automation tools can keep your tasks organized without extra manual work.

Starting from scratch can be intimidating, but platforms with pre-made templates make the process much simpler. These templates are pre-designed workflows for common tasks like sending reminders, managing approvals, or tracking progress.

For example, if you need a workflow to notify your team when a task is done, you can select a template, make a few adjustments, and have it ready in minutes. This feature is especially useful for beginners exploring no code automation for the first time.

Every business works differently, and your workflows should match your unique needs. The right workflow tools should let you customize workflows to fit your processes.

For example, you might need to route tasks to specific team members based on their roles or create workflows with multiple steps for larger projects. A flexible platform ensures your process automation tools can handle your team’s specific way of working.

Staying informed about what’s happening in your workflows is essential. A good platform should send notifications when tasks are completed, deadlines are approaching, or something needs your attention.

Tracking tools are equally important. Dashboards or reports showing which tasks are pending, completed, or delayed can help your team stay on top of their work. With reliable workflow automation tools, you can avoid bottlenecks and make sure everything stays on track.

As your team or workload expands, your automation tools should be able to keep up. Look for a platform that can handle more tasks, users, or workflows as your needs change.

For example, if your business takes on new clients or bigger projects, your workflows might need additional steps or more detailed tracking. A platform that grows with you ensures your no code tools stay useful over time.

When using process automation tools, keeping your data safe is crucial. Look for platforms that offer secure storage and protection for sensitive information, especially if your workflows involve client data or internal documents.

For example, if your workflows handle customer payments or private business information, security features like encrypted data storage can help you stay compliant and protect your reputation.

Even if a platform is easy to use, it’s always helpful to have access to support when you need it. Tutorials, FAQs, and customer service can make all the difference if you run into questions.

Some platforms also offer user communities where you can share tips, find inspiration, or troubleshoot issues. A supportive environment can help you get the most out of your workflow tools and discover new ways to improve your processes.

Not all businesses have the same budget, and the right workflow automation tools should provide a range of pricing plans. Look for platforms that offer flexible plans based on your team size and needs.

For example, a small business might start with a basic plan and upgrade as their requirements grow. Many platforms offer free trials, letting you explore their features before making a commitment.

In today’s fast-paced world, it’s helpful to manage workflows from anywhere. A platform with mobile access ensures you can check progress, approve tasks, or adjust workflows directly from your smartphone or tablet.

This is particularly useful for teams working remotely or for managers who need to stay connected even while traveling. Automation tools with mobile capabilities keep your workflows running smoothly, no matter where you are.

Finding the right platform for your workflows doesn’t have to be complicated. At Relu Consultancy, we specialize in helping businesses make the most of automation tools to manage their processes with ease. Whether you’re just starting with no code automation or looking for ways to improve your existing setup, we offer guidance to create workflows that match your needs.

Our expertise with no code tools, workflow automation tools, and process automation tools ensures you can connect your favorite apps, build custom workflows, and stay on top of your tasks without unnecessary stress. We’re here to help you organize and simplify your workflows, making your day-to-day work more manageable.

Ready to take control of your workflows? Get in touch with Relu Consultancy today, and let’s build tools that work for you.

Podcasting has evolved considerably since the term "podcast" was first coined by BBC journalist and The Guardian columnist Ben Hammersley nearly two decades ago in February 2004.

He combined the terms ‘iPod’ and ‘broadcast’ to define the growing medium. In 2005, Apple recognized the potential of podcasts and released iTunes 4.9, which integrated support for podcasts. Over the years, podcasts have become a popular form of media consumption.

32,158! That’s how many podcasts there are in India in 2024. Several factors have contributed to its growth, with AI being one of the biggest.

This blog will explore the current condition and future of podcasting in India.

India has become one of the biggest players in the global podcasting industry. So much so that the country’s podcast-listening market ranks third behind the US and China, with over 57.6 million listeners. Podcasts can be used for various purposes, including education, entertainment, business growth, etc.

Owing to its versatility, the podcasting-listening audience is expected to surpass 200 million listeners by 2025. However, several other reasons have contributed to the growth of podcasting in India. Some of these are convenience, the availability of diverse content, and increased accessibility.

Even the revenue of the Indian podcasting Industry is expected to reach $3,272.6 million by 2030, compared to $470.3 million in 2023.

Here are the key AI-driven trends that are shaping the podcasting industry:

There are several ways in which AI is driving revenue growth in the podcasting industry, with automation being one of the most effective ways. AI allows you to automate different aspects of podcast production, from editing to transcription and content recommendation. With AI, you can cut down on the time and resources needed to create podcasts. Reaching a wider audience has become easy through AI-optimized distribution strategies.

You can even personalize content based on listener data, increasing engagement and the potential to monetize your podcasts. Optimizing ad targeting and delivery with AI can help you attract more advertisers and command higher ad rates. Eventually, you can better align with brands for sponsorship opportunities with AI-driven insights about audience demographics.

If creating podcasts is a part of your business marketing strategy, you must use AI-powered podcast solutions for several reasons.

Let’s look at some common challenges and ethical concerns accompanying AI podcasting.

Here are some common ethical practices and mitigating strategies you can adopt to address these challenges:

The future of podcasts in India is closely tied to AI-driven solutions, and it looks quite promising due to the significant growth the market is expected to experience. There are a number of reasons behind this rise, including diverse content in regional languages, increasing listenership, and a growing demand for on-demand audio content. This will position India as one of the fastest-growing podcasting markets worldwide. There are predictions for a larger listener base and substantial revenue generation in the coming years.

The podcasting industry in India is at a pivotal moment, as AI is revolutionizing the way podcasts are created, distributed, and monetized. More podcasters are leveraging AI tools for automation, streamlining workflows, and enhancing efficiency. With advanced AI algorithms providing personalized recommendations, podcasters can now prioritize maintaining a deep level of engagement with their target audience over just reaching them.

The impact of AI on the podcasting industry will extend far beyond improving quality, reducing costs, and saving time. It will open new revenue streams and unlock limitless opportunities for growth and innovation.

Getting the right data about films, content preferences, and market trends is crucial for making informed decisions in the entertainment and media business. But with information scattered across countless websites, collecting it manually isn't practical.

AI-driven web scraping offers a smart solution that automates how we gather and organize online film data.

In this article, we'll look at what AI scraping is and how it can benefit professionals working in film and media.

AI data scraping combines artificial intelligence and machine learning to gather large datasets from the internet in a more efficient manner.

This advanced approach identifies, extracts, and organizes relevant data from websites by recognizing specific patterns in HTML structures or through API connections. The collected information, which includes text, images, videos, or metadata, is then systematically stored in databases or spreadsheets for further analysis.

As the entertainment industry becomes increasingly data-driven, AI data scraping offers several key advantages for film analytics:

AI data scraping has become essential for film industry professionals, from marketing teams to content creators. Here's how it helps across different areas:

Emotions influence decisions in the entertainment industry, making sentiment analysis an essential tool for industry professionals. They help filmmakers, writers, and others in the industry understand how viewers feel about their content.

With AI-driven web scraping, you can collect viewer opinions and reactions from diverse sources, including review websites and social media platforms.

By analyzing this audience feedback, you can identify what elements of your content connect most strongly with audiences. These insights help guide your creative and marketing strategies as well as future content development.

AI-driven scraping provides crucial insights into both theatrical and streaming performance metrics. It helps to extract and organize box office data on different films, making it easy to compare their success based on factors like genre, budget, and release strategy.

While it can be easy to assume which films might go hit, this data-driven approach often reveals unexpected trends about which genre will succeed financially.

Additionally, scrapers can gather valuable viewership data from streaming platforms focusing on audience preferences and consumption patterns across different distribution channels. Such comprehensive insights enable producers and investors to make more informed decisions about future projects and optimize their distribution strategies.

AI web scraping enables real-time monitoring of competitor activities in the film industry. It helps to scrape data on content performance, release strategies, and audience engagement. By analyzing this information, you can spot what your competitors are doing well, where they're falling short, and what gaps exist in the market.

With AI data extraction, you can also process genre preferences, demographic profiles, and cultural influences to identify emerging patterns and unmet needs like underrepresented genres.

As a film writer, gathering information about movies- from themes and character details to cast, crew, and production specifics is an essential part of your work. Web scraping tools can automatically gather these details from various websites and organize them in a structured format.

These tools give you access to both current details and historical content like past reviews, articles, and profiles that you can use as references in your writing.

Custom web scrapers take things a step further by extracting specific types of film information based on your needs. For example, if you're researching films centered around disability themes, the scraper can be configured to focus on films that match this criterion, making the data collection process more targeted.

AI scraper enables you to identify the most effective channels and audience segments by analyzing massive volumes of data across platforms.

By gathering data from social media (Facebook, Instagram, Twitter) and video platforms (YouTube, TikTok), marketers can see where target audiences engage most actively with content. This insight helps you allocate budgets to platforms that generate higher engagement, shares, and trailer reviews.

AI-driven tools also analyse demographics, behaviors, and interests from various platforms. This helps you tailor your marketing campaigns for specific groups like sci-fi enthusiasts for your upcoming space movie.

The film industry generates massive amounts of data across streaming platforms, theaters, and review sites daily. This is where AI data scraping changes everything.

Instead of drowning in spreadsheets and tabs, imagine having a smart system that automatically gathers exactly what you need.

At Relu Consultancy, we build custom scraping tools that work for your specific needs. Our tools grab the data you actually care about, so you can focus on making the decisions that matter.

Looking to make smarter, data-backed moves in the film industry? Our custom AI tools can help you get there.

Today's real estate decisions need more than just market knowledge and instinct - they need solid data. From daily property listings to housing market trends, there's valuable information hidden across different real estate platforms.

Data scraping helps you capture this information automatically and turn it into insights you can use. Whether you're an agent seeking the best properties for clients or an investor hunting for opportunities, real estate data scraping gives you a competitive edge in the market.

In this guide, we'll walk you through what real estate data scraping means, how it benefits your business, and the different ways to collect the data you need.

Data scraping uses software and bots to gather data from websites automatically. When a scraper requests information from a website server, it receives the requested page and then pulls out specific details like news, prices, or contact information.

In real estate, data scraping means collecting information from property websites, listings, and public records.

Using scraping tools, you can collect data from real estate sites like Realtor.com, Zillow, and government databases to understand market patterns, prices, and lucrative investments. Here's what real estate data scraping offers you:

This covers the essential details related to the property, such as its address and type, whether it is a single-family home, multi-family unit, condo or apartment.

Real estate data scraping also helps you find out the construction year, total square footage, and interior details like bedroom count, bathroom count, living spaces, and kitchen features. Its exterior elements including garage spaces, swimming pools, and other outdoor amenities.

The dataset shows each property's listing price - the initial amount set by sellers when their properties enter the market. It also offers information on price per square foot if it is available on the platform.

You can track the property's complete price history, including previous listing prices, price reductions, and increases over time.

Real estate data scraping reveals details about agents involved in property transactions. You can access agents' contact information, including names, phone numbers, email addresses, and office locations when they are listed. It also sheds light on agent ratings and reviews from past clients.

This data includes insurance details, loan history, and mortgage records of properties. You can also access area-specific information like average family income, demographic surveys, school ratings, and local crime rates. Additional records cover property tax assessments, current zoning laws, and building permit histories.

Here's how data scraping provides vital advantages in the real estate industry:

Real estate data scraping helps you spot market changes before they happen and plan your next steps. By gathering data on property prices, sales numbers, and mortgage rates over time, you can identify patterns and get a clearer picture of whether the market is headed.

Web scraping also tracks websites continuously, bringing in fresh data throughout the day - whether that's every few minutes, hours, or days, depending on what you need. Having this up-to-date information allows you to make decisions proactively, change your investment approach, adjust your property holdings, or choose the right time to buy and sell.

When listing a property, deciding on the right price is a complicated task that comprises a thorough real estate data analysis.

Data scraping pulls together details from many sources about similar properties in the area – everything from square footage and room count to amenities and market values. This complete picture helps you set fair prices that work for both sellers and buyers by showing you how similar properties are valued.

You can create a competitive pricing model, whether that involves going lower to get more buyer interest or higher to match the property's features and local demand.

Keeping an eye out for your competitors is essential to strategically positioning your business and attracting buyers. Through real estate data extraction, you can monitor competitor listings, pricing strategies, marketing campaigns, and customer reviews.

By scraping data from competitor websites, you see what works and what doesn't in their business. For example, if scraped data shows competitors' properties are selling quickly at higher prices, you might focus on investing or adjusting your prices there.

By pulling information from sites with reviews, rental listings, and occupancy rates, you can understand what properties are in demand. This data reveals current market rental values, tenant preferences, and property trends.

Such insights can help you make informed decisions about your rental portfolios to improve tenant satisfaction and reduce vacancy rates.

For instance, if your property data extraction shows a high demand for furnished units or pet-friendly properties in your area, you can adjust your offerings to match.

Understanding buyer preferences and behaviour is vital for tailoring marketing strategies and enhancing customer experience. Real estate data extraction can provide insights into what buyers value, like:

By analyzing these insights, you can better understand and provide what your buyers are looking for. This focused approach not only improves your closing rates but also creates better customer experiences by matching their exact needs.

Real estate data scraping helps identify active leads and potential clients. By monitoring forums, social media, and feedback sites, real estate businesses can find people who are seriously considering property transactions.

You can track their locations, preferences, and discussions to build detailed buyer profiles that make your lead generation more effective.

Real estate scraping tools eliminate manual data collection. Instead of agents spending hours searching different websites for property listings, housing market trends, and demographic details, these tools pull all information within seconds. This saves valuable time you can use for more important tasks like client meetings and deal negotiations.

These tools also remove human error risks by automatically collecting and organizing property data from multiple sources into structured spreadsheets for analysis.

Extracting real estate data helps spot profitable investment opportunities. By pulling data from different real estate platforms, you can find undervalued properties or up-and-coming neighbourhoods. This foresight can help identify areas likely to give good returns, leading to smarter investment choices and minimizing risks.

Developing your own real estate data scraper gives you complete control over data collection and processing methods.

While it requires technical expertise and regular maintenance, partnering with an agency like Relu Consultancy can get you a tailored data scraping solution that delivers exactly what your business needs.

You can tailor the scraper to extract data points specific to your niche, such as rental trends or luxury properties. As your business grows, you can adapt the tool, adding more features or targeting new markets.

Pre-built web scrapers let both technical and non-technical users pull data from any real estate website. These services handle complex issues like anti-scraping measures and proxy management automatically.

However, they may offer less flexibility and scaling options compared to custom-coded solutions. Pre-built web scraping tools come in three types:

Scraping APIs provide a direct way to collect data from websites that support this technology. These are pre-built interfaces that let you request and receive specific data from websites without needing to scrape their pages directly.

Instead of dealing with webpage structures and HTML, the method pulls data straight from the website's API, making the process more reliable and easier to manage.

By extracting and analyzing property data, you gain critical insights into market trends, property values, customer preferences, and competitor strategies. These insights help optimize everything from pricing and property management to lead generation and customer experience.

While data scraping offers powerful advantages, it's crucial to use it ethically and responsibly, following legal guidelines and respecting privacy standards. When implemented correctly, it becomes a key differentiator for real estate businesses.

Want to access data-driven insights for your real estate business? At Relu Consultancy, we create custom real estate data scraping solutions that deliver the comprehensive, real-time data you need to stay competitive in today's market.

Operational efficiency is essential for all businesses to succeed. Low code and no code workflow automation can help your business streamline operations and reduce repetitive tasks. Your employees feel empowered even when they have minimal technical expertise. Moreover, even non-developers can create complex workflows easily by using pre-built templates, real-time integrations, and user-friendly drag-and-drop features.

There are several reasons for businesses across different industries to use workflow automation, with increased productivity, fewer errors, and less reliance on IT teams being the most common ones.

Let’s find out how you can use workflow automation to your advantage, innovate, and stay competitive.

Low code or no code workflow automation tools enable you to create and automate tasks. Do you know what’s the best part about using no code automation? It’s as the name suggests. You don’t have to worry about writing long lines of code. Instead, you can either use pre-built templates or customize them to suit your needs.

You can also use drag-and-drop interfaces on workflow automation tools and software to quickly design and deploy workflows with little to no coding experience required. This enables you to automate, optimize, and streamline repetitive manual tasks, making processes smoother and more efficient. Low code automation, on the other hand, may require some coding to automate business processes.

Let’s look at some of the major benefits you can enjoy with low-code and no-code workflow automation.

Here are the major use cases of low code no code platforms for workflow automation.

Here are some factors you should consider when choosing a workflow automation tool.

Despite several benefits, no code workflow automation tools come with some challenges.

Low code and no code workflow automation is essential for your business to move forward as it breaks down barriers to technology. With workflow automation, your team can focus on more value-driven and strategic instead of manual processes that consume a lot of time. You can achieve improved accuracy, huge cost savings, and enhanced collaboration. Workflow automation tools are likely to get more advanced in the future, with AI-powered functionalities and integrations. If you want to achieve long-term success, workflow automation tools are indispensable because they can change the way you approach operational efficiency.

Drastically elevate your efficiency with Relu Consultancy’s process and no-code automation services. Cut out complexity and drive growth with our effective automation solutions. Get in touch with us to learn more!

In today’s business world, staying ahead isn’t just about having great products or services. It’s about knowing what your competitors are doing, keeping an eye on trends, and making smart decisions based on real information. Data is an indispensable tool. That’s where web crawling comes in—it’s a tool that helps businesses collect important data from all over the internet without needing to spend hours manually searching for it.

Let’s look at how web crawling can help with competitive analysis and market research, making it easier for businesses to stay in the loop.

Think of web crawling as sending out a digital assistant to explore the internet for you. These “crawlers” visit different websites, grab useful public data, and bring it back to you. For your business, this means you can easily track what competitors are up to, understand market trends, and get insights to make better decisions—all without constantly visiting websites yourself.

Web crawling works by systematically scanning pages on websites, following links, and collecting data like product prices, customer reviews, or blog posts. The best part is that this happens continuously in the background, giving you updated information whenever you need it. Whether it’s tracking a competitor’s pricing or gathering customer feedback, a web crawler online makes the data collection process simple and hands-off.

Keeping an eye on what your competitors are doing can be time-consuming, but web crawling makes it simple. Here’s how it works:

Web crawling isn’t just about watching competitors—it’s also a great tool for keeping up with your market. Here’s how businesses use it:

This data can help your business make smart, well-informed decisions and spot opportunities for growth. Web crawling offers a complete view of the market, helping businesses to stay flexible and responsive.

Web crawling can collect a variety of data that can be useful for both competitive analysis and market research, such as:

This data allows businesses to make informed, timely decisions that can boost their competitive edge.

Web crawling isn’t as complicated as it sounds. In fact, there are several tools available that make it easy for businesses to get started. Some of the popular options include user-friendly platforms that require little technical knowledge. These tools allow you to track competitors, gather market insights, and even crawl websites for keywords to keep your SEO strategy sharp.

Of course, web crawling isn’t without its challenges. Potential problems to be mindful of include:

Despite these challenges, with the right approach and tools, businesses can still gather significant amounts of useful data without running into too many roadblocks.

Web crawling is becoming more important as businesses shift toward more data-driven strategies. As technology evolves, web crawling will continue to offer businesses faster, more effective ways to gather insights. Whether it’s tracking competitors or exploring new market opportunities, web crawling will remain a vital tool in helping companies stay competitive.

Here’s how different industries can leverage web crawling: